Overview

In recent years, large language models have achieved significant success in generative tasks (e.g., speech cloning and audio generation) across speech, audio, music, and other signal domains. A crucial element of these models is the discrete acoustic codec, which serves as an intermediate representation in place of the mel-spectrogram. However, several gaps remain between discrete codecs and downstream speech language models: 1) Because of the reconstruction paradigm of the codec model and the structure of residual vector quantization, the first codebook channel contains excessive information, which makes it difficult to generate acoustic tokens directly from weakly supervised signals such as text in downstream tasks. 2) Good reconstruction performance requires many codebooks, which increases the burden on downstream speech language models. To address these gaps, we propose Language-Codec, which is designed around the characteristics of speech language models. Language-Codec introduces a Masked Channel Residual Vector Quantization (MCRVQ) mechanism, together with improved Fourier transform structures, attention blocks, and a refined discriminator design. We compare our method with competing audio compression algorithms and observe that it significantly outperforms them across extensive evaluations. We also validate the efficiency of Language-Codec on downstream speech language models.

Model Architecture

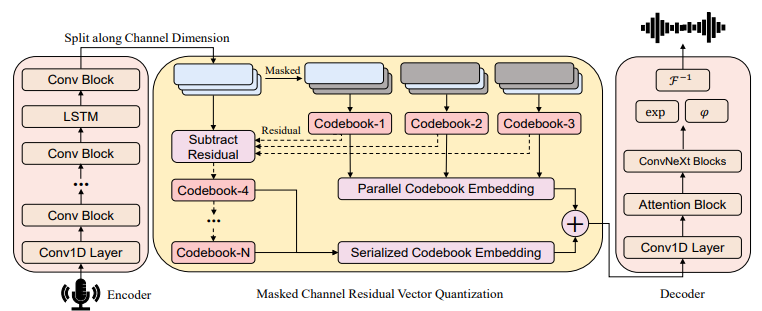

The overall architecture of Language-Codec. On the far left is the encoder downsampling module, which retains the model structure of Encodec. On the far right is the decoder upsampling module, which we have replaced with the model structure of Vocos. The middle part is the Masked Channel Residual Vector Quantization (MCRVQ) module, where the gray blocks indicate the masked portion of temporal information. The dashed lines within the MCRVQ module indicate that the residual values of the corresponding representations decrease.


Figure 1. The overall architecture of Language-Codec.
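As a rough illustration of the residual structure shown in the figure, the following toy sketch implements plain residual vector quantization (RVQ) in pure Python. It is not the MCRVQ mechanism: the masking step is not specified on this page and is not reproduced here. The codebooks are random, their sizes and scales are arbitrary choices, and the helper names (`make_toy_codebooks`, `rvq_encode`, `rvq_decode`) are hypothetical. Each toy codebook includes the zero vector, which guarantees that the residual norm never grows from one stage to the next.

```python
import random


def make_toy_codebooks(num_stages=4, entries=8, dim=4, seed=0):
    """Build random toy codebooks (an assumption, not trained codebooks).

    Each stage uses a smaller value range than the previous one, and each
    codebook contains the zero vector so a stage can never increase the residual.
    """
    rng = random.Random(seed)
    codebooks = []
    for stage in range(num_stages):
        scale = 0.5 ** stage
        codebook = [[0.0] * dim]
        for _ in range(entries - 1):
            codebook.append([rng.uniform(-scale, scale) for _ in range(dim)])
        codebooks.append(codebook)
    return codebooks


def nearest(codebook, vec):
    """Return the index of the codebook entry closest to vec (squared L2)."""
    return min(
        range(len(codebook)),
        key=lambda i: sum((c - v) ** 2 for c, v in zip(codebook[i], vec)),
    )


def rvq_encode(vec, codebooks):
    """Quantize vec stage by stage; each stage encodes the previous residual."""
    residual = list(vec)
    indices, residual_norms = [], []
    for codebook in codebooks:
        idx = nearest(codebook, residual)
        indices.append(idx)
        residual = [r - e for r, e in zip(residual, codebook[idx])]
        residual_norms.append(sum(r * r for r in residual) ** 0.5)
    return indices, residual_norms


def rvq_decode(indices, codebooks):
    """Reconstruct the vector as the sum of the selected entries of all stages."""
    out = [0.0] * len(codebooks[0][0])
    for idx, codebook in zip(indices, codebooks):
        out = [o + e for o, e in zip(out, codebook[idx])]
    return out


if __name__ == "__main__":
    books = make_toy_codebooks()
    idxs, norms = rvq_encode([0.9, -0.3, 0.4, 0.1], books)
    print("indices per stage:", idxs)
    print("residual norm after each stage:", norms)
```

Because every stage only encodes what the earlier stages missed, the first codebook in such a scheme tends to carry the coarsest and largest share of the signal, which is the imbalance described in the overview.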

Experiments

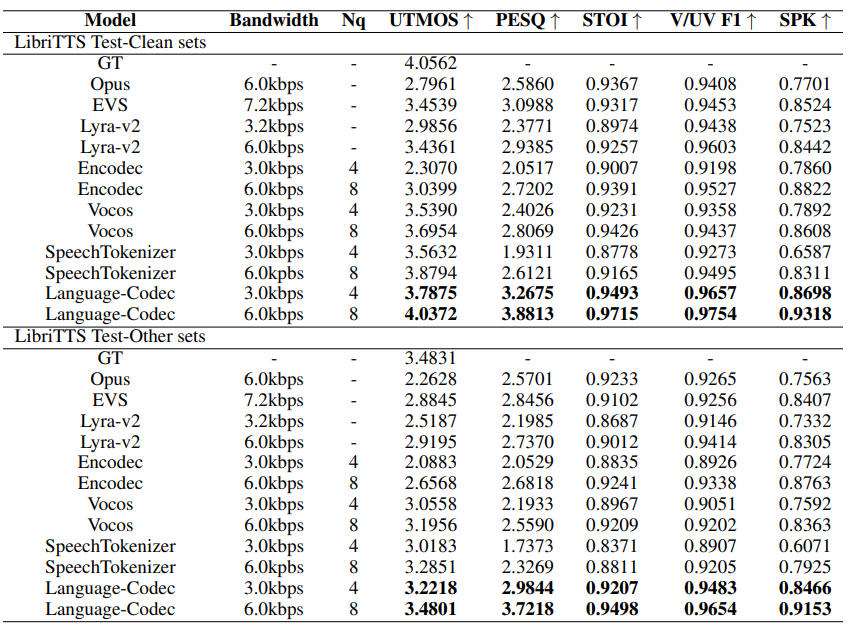

We evaluated the performance of the codec model on the test sets of LibriTTS. The Test-Clean collection consists of 4,837 audio samples, while the Test-Other collection, which mostly contains audio recorded in noisy environments, comprises 5,120 audio samples. Because the primary purpose of discrete codecs is to serve as an audio representation for downstream tasks, an excessive number of channels would significantly burden downstream speech language models. We therefore compared four-channel and eight-channel configurations. Among the objective metrics we employed, UTMOS and speaker similarity closely approximate the subjective perception of human listeners, whereas PESQ, STOI, and F1 are more indicative of the inherent quality of the audio signal. Because the differences in UTMOS are subtle, we highlight the two models with the highest UTMOS scores for each channel configuration. For the remaining objective audio quality metrics, we highlight only the best-performing model. The experimental results are shown in Table 1.


Table 1. Results of different codec models on the LibriTTS Test-Clean and Test-Other sets.
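To make the cost of extra channels concrete, the sketch below computes the bitrate and the token rate that a downstream language model would have to handle for a given number of codebooks. The frame rate of 75 Hz and the codebook size of 1024 are assumptions taken from Encodec's 24 kHz configuration; this page does not state them for Language-Codec. The function names are hypothetical.

```python
import math


def codec_bitrate_bps(num_codebooks, codebook_size, frame_rate_hz):
    """Bitrate in bits per second: codebooks x bits per token x frames per second."""
    bits_per_token = math.log2(codebook_size)
    return num_codebooks * bits_per_token * frame_rate_hz


def tokens_per_second(num_codebooks, frame_rate_hz):
    """Number of discrete tokens produced per second of audio."""
    return num_codebooks * frame_rate_hz


if __name__ == "__main__":
    # Assumed values (not stated on this page): 1024 entries, 75 frames per second.
    for channels in (4, 8):
        bps = codec_bitrate_bps(channels, codebook_size=1024, frame_rate_hz=75)
        tps = tokens_per_second(channels, frame_rate_hz=75)
        print(f"{channels} codebooks: {bps / 1000:.1f} kbps, {tps} tokens/s")
```

Under these assumptions, eight codebooks give 6.0 kbps, which agrees with the bitrate label used for the audio samples on this page, and four codebooks halve both the bitrate and the number of tokens per second.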

Comparison with Other Models

We provide ten sets of audio samples to compare Language-Codec with other models.

1. He felt above him the vast indifferent dome and the calm processes of the heavenly bodies; and the earth beneath him, the earth that had borne him, had taken him to her breast.

| GT | Opus(6.0kbps) | EVS(7.2kbps) | Lyra-v2(6.0kbps) | Encodec(6.0kbps) | SpeechTokenizer(6.0kbps) | LanguageCodec(6.0kbps) |
|---|---|---|---|---|---|---|

2. Such is their ponderous weight that they cannot rise from the horizon; but, obeying an impulse from higher currents, their dense consistency slowly yields.

| GT | Opus(6.0kbps) | EVS(7.2kbps) | Lyra-v2(6.0kbps) | Encodec(6.0kbps) | SpeechTokenizer(6.0kbps) | LanguageCodec(6.0kbps) |
|---|---|---|---|---|---|---|

3. “Now let the devils strike our scent!” said the scout, tearing two rifles, with all their attendant accouterments, from beneath a bush, and flourishing “killdeer” as he handed Uncas his weapon; “two, at least, will find it to their deaths.”

| GT | Opus(6.0kbps) | EVS(7.2kbps) | Lyra-v2(6.0kbps) | Encodec(6.0kbps) | SpeechTokenizer(6.0kbps) | LanguageCodec(6.0kbps) |
|---|---|---|---|---|---|---|

4. Well, if there is nothing to be learned here, we had best go inside.

| GT | Opus(6.0kbps) | EVS(7.2kbps) | Lyra-v2(6.0kbps) | Encodec(6.0kbps) | SpeechTokenizer(6.0kbps) | LanguageCodec(6.0kbps) |
|---|---|---|---|---|---|---|

5. He grasped his hoe and started briskly to work.

| GT | Opus(6.0kbps) | EVS(7.2kbps) | Lyra-v2(6.0kbps) | Encodec(6.0kbps) | SpeechTokenizer(6.0kbps) | LanguageCodec(6.0kbps) |
|---|---|---|---|---|---|---|

6. Critias when he told this tale of the olden time, was ninety years old, I being not more than ten.

| GT | Opus(6.0kbps) | EVS(7.2kbps) | Lyra-v2(6.0kbps) | Encodec(6.0kbps) | SpeechTokenizer(6.0kbps) | LanguageCodec(6.0kbps) |
|---|---|---|---|---|---|---|

7. Stung by anxiety for this little sister, she upbraided Miss W— for her fancied indifference to Anne’s state of health.

| GT | Opus(6.0kbps) | EVS(7.2kbps) | Lyra-v2(6.0kbps) | Encodec(6.0kbps) | SpeechTokenizer(6.0kbps) | LanguageCodec(6.0kbps) |
|---|---|---|---|---|---|---|

8. It’s delightful to hear it in a London theatre.

| GT | Opus(6.0kbps) | EVS(7.2kbps) | Lyra-v2(6.0kbps) | Encodec(6.0kbps) | SpeechTokenizer(6.0kbps) | LanguageCodec(6.0kbps) |
|---|---|---|---|---|---|---|

9. The Carey housewarming.

| GT | Opus(6.0kbps) | EVS(7.2kbps) | Lyra-v2(6.0kbps) | Encodec(6.0kbps) | SpeechTokenizer(6.0kbps) | LanguageCodec(6.0kbps) |
|---|---|---|---|---|---|---|

10. This was at the March election, eighteen fifty five.

| GT | Opus(6.0kbps) | EVS(7.2kbps) | Lyra-v2(6.0kbps) | Encodec(6.0kbps) | SpeechTokenizer(6.0kbps) | LanguageCodec(6.0kbps) |
|---|---|---|---|---|---|---|

More Audio Samples

Here, we provide more audio samples to demonstrate the differences between Language-Codec and the ground truth (GT).

1. Let me not survive my disgrace!

| GT | LanguageCodec(6.0kbps) |
|---|---|

2. Dr. Rohlfs writes to me that he found the mixed races in the great Sahara, derived from Arabs, Berbers, and Negroes of three tribes, extraordinarily fertile.

| GT | LanguageCodec(6.0kbps) |
|---|---|

3. “How long ago were you arrested?” asked Beth.

| GT | LanguageCodec(6.0kbps) |
|---|---|

4. Then I fell down at Tom Temple’s feet.

| GT | LanguageCodec(6.0kbps) |
|---|---|

5. He’s better now.

| GT | LanguageCodec(6.0kbps) |
|---|---|

6. Conclusion.

| GT | LanguageCodec(6.0kbps) |
|---|---|

7. Was the young lady Naomi Colebrook?

| GT | LanguageCodec(6.0kbps) |
|---|---|

8. The mill was at the outskirts of the town.

| GT | LanguageCodec(6.0kbps) |
|---|---|

9. There was but one door to the house.

| GT | LanguageCodec(6.0kbps) |
|---|---|

10. All my men put their hands to their mouths and shouted.

| GT | LanguageCodec(6.0kbps) |
|---|---|

11. Yes; she’ll worry about me, I know.

| GT | LanguageCodec(6.0kbps) |
|---|---|

12. And where is he staying?

| GT | LanguageCodec(6.0kbps) |
|---|---|

13. His trial has not yet taken place, and instead of your devoting considerable of your valuable time appearing against him it would be much simpler to settle the matter right here and now.

| GT | LanguageCodec(6.0kbps) |
|---|---|

14. That?

| GT | LanguageCodec(6.0kbps) |
|---|---|

15. At the turn of the road he ran up against the tanner’s boy, Lars.

| GT | LanguageCodec(6.0kbps) |
|---|---|

16. ‘I take that for granted: in the nature of things it can hardly be otherwise,’ I replied, a good deal startled and perplexed by the curious audacity of her interrogatory.

| GT | LanguageCodec(6.0kbps) |
|---|---|

17. We will make a little tour together, when all this shall have blown over, in a few weeks, and choose our retreat; and with the winter’s snow we’ll vanish from Brandon, and appear with the early flowers at our cottage among the beautiful woods and hills of Wales.

| GT | LanguageCodec(6.0kbps) |
|---|---|

18. Excuse me, please.

| GT | LanguageCodec(6.0kbps) |
|---|---|

19. I’ve heard of your coming here, and sending, so often.

| GT | LanguageCodec(6.0kbps) |
|---|---|

20. Yes, sir, I plead guilty, although I’ve been told I ought not to confess.

| GT | LanguageCodec(6.0kbps) |
|---|---|